AI can write entire blog posts in seconds, but that speed comes with a cost: accuracy. Misinformation can slip through, and most people won’t notice until it’s too late.

This guide breaks down how to fact check AI content properly, what to look out for, and how to avoid publishing errors that could damage your credibility.

Why You Can’t Fully Trust AI Content Out of the Box

Even the most advanced AI models can get things wrong. They’re fast, convenient, and sometimes shockingly articulate, but none of that guarantees factual accuracy. If you’re treating AI-generated content like a final product, you’re risking the spread of false claims, fabricated sources, and misinterpretations.

This section breaks down the biggest reasons why blindly trusting AI output is risky business.

AI models aren’t sources; they’re synthesizers

AI doesn’t “know” things. It pieces together information from patterns in its training data. That data may include reliable sources, but it also includes outdated websites, misinformation, and obscure forums. When AI generates a sentence, it’s not citing a verified source. It’s predicting the most plausible next words based on those patterns.

This is why tools like ChatGPT sometimes invent academic studies, articles, or experts that don’t actually exist. It’s not lying. It’s predicting what a study should look like based on patterns in past training. That distinction matters.

Speed comes at the cost of accuracy

AI’s biggest appeal is how quickly it can generate paragraphs, outlines, even full articles. But that speed often sacrifices depth, context, and proper attribution. Just because a sentence sounds polished doesn’t mean it holds up to scrutiny.

What makes this worse is how convincingly wrong AI can be. It writes with such fluency and confidence that most readers assume the information must be correct when in reality, the facts may be completely off.

Bias isn’t always obvious, but it’s always there

AI reflects the data it’s trained on, and that data is full of bias. This includes:

- Cultural and racial bias embedded in historical data

- Political slants in news content

- Skewed gender representation

- Tech-industry perspectives dominating certain topics

These aren’t always visible on the surface. But they shape how AI responds to prompts, subtly tilting content in a particular direction. If you’re not actively checking for it, biased information can pass as neutral fact.

Most Common Errors in AI-Generated Content

AI can produce text that feels polished and intelligent, but underneath the surface, errors are often baked in. These aren’t always easy to catch on a quick read, especially when the tone feels authoritative. But if you’re relying on AI to write for your brand, blog, or campaign, these mistakes can quietly cost you trust and credibility.

Here are the most common factual errors to watch for and why they happen.

Fabricated sources or studies are more common than you think

AI has a tendency to invent research that sounds legitimate. It may generate the name of a journal, slap a date on it, and even fabricate a believable-sounding title. But when you try to look it up? Nothing.

This usually happens when you ask the AI to back up a point with a citation or academic reference. It understands the format of a citation, but not the verifiability of the information behind it.

What to do:

If an AI-generated article includes a claim followed by a source, always plug that citation into Google or a scholarly database. If the source can’t be found, or the link leads to something unrelated, you’re dealing with a hallucination.

Misquoted or misattributed statements slip through easily

AI pulls from a sea of public data. Sometimes it mixes things up, attributing a quote to the wrong person or combining parts of different quotes into one. It might also paraphrase a quote and change its original meaning.

Even high-profile figures like Einstein or Martin Luther King Jr. are often attached to quotes they never actually said. AI picks up on this common internet mistake and repeats it.

Why it matters:

Misquoting someone, especially in professional or journalistic content, damages your credibility and can even lead to legal issues depending on the context.

Outdated or misleading statistics can sound current

AI models are often trained on datasets that stop at a certain cutoff date. Even when updated, they don’t always know what’s recent. So if you ask for the “latest” data on a trend or industry stat, the AI might give you something from 2019, and phrase it as if it’s current.

Watch for:

- Economic data like GDP, unemployment rates, market trends

- Medical or scientific stats

- Technology adoption figures

- Social media user counts

To confirm any stat, go directly to source databases like Statista, Pew Research, government portals, or industry reports.
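As a rough first pass, a short script can list the years a draft mentions and flag the old ones. This is a minimal sketch, not a complete check: the function name `stale_years` and the two-year threshold `max_age_years` are assumptions chosen for illustration, and it only catches figures that carry an explicit year. Undated statistics still need a manual look.

```python
import re
from datetime import date

# Matches four-digit years from 1900 to 2099.
YEAR_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")

def stale_years(text, max_age_years=2, today=None):
    """Return the years mentioned in text that are older than max_age_years."""
    current_year = (today or date.today()).year
    years = sorted({int(y) for y in YEAR_PATTERN.findall(text)})
    return [y for y in years if current_year - y > max_age_years]

# Placeholder draft text, not real data.
draft = "A 2019 survey put adoption at 40%; a 2024 update is pending."
print(stale_years(draft, today=date(2025, 1, 1)))
```

Any year the script returns is a prompt to look for a newer figure at the original source, not proof that the number is wrong.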

Oversimplified explanations lead to false equivalence

AI is trained to be helpful. That often means reducing complex topics into digestible summaries. But sometimes, in making things “easier to understand,” it strips away nuance.

For example:

- It might suggest there’s equal debate on topics where there’s overwhelming consensus.

- It may frame both sides of an issue as equally valid when one is rooted in misinformation.

This is especially dangerous in fields like climate science, medicine, or history, where simplification can warp public understanding.

Context stripping changes everything

AI doesn’t always understand why something matters. It just knows it came up in similar contexts. So it might mention a stat, quote, or event without including what came before or after. That missing context can completely change how a reader interprets the information.

Examples of stripped context:

- Quoting a CEO out of a longer conversation where their meaning was different

- Presenting a statistic without noting the margin of error or how the data was collected

- Summarizing a news story while omitting key facts that affect public perception

When reviewing AI output, always ask: Does this tell the whole story? Or just the part that fits a certain angle?

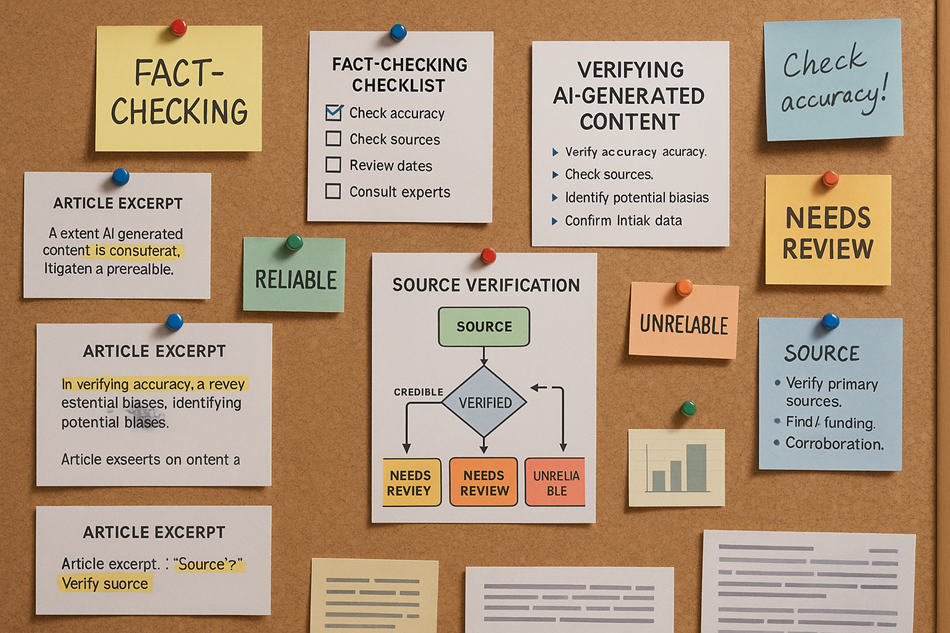

The 3-Step Process to Fact Check AI Content

You don’t need a journalism degree to verify what AI spits out. What you do need is a method: a simple, consistent process that helps you catch mistakes before they reach your audience. This three-step system is designed to help you quickly vet AI-generated content, even if you’re not a subject matter expert.

Step 1 – Identify all claims that require verification

Not every sentence in a piece of content needs to be fact-checked. But the moment an AI tool mentions a number, name, event, or cause-effect relationship, it’s time to slow down.

Here’s what to look for:

- Statistics: Any mention of percentages, figures, or rankings.

- Historical references: Dates, timelines, or events tied to specific people or movements.

- Quotes: Especially those attributed to public figures or experts.

- Scientific or medical claims: Even basic health statements need confirmation.

- Legal, political, or economic analysis: These are often oversimplified or outdated.

Flagging these in your draft, either by highlighting or commenting, will save you time in the next step.
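If you’re handling a lot of drafts, part of this flagging can be roughed out in code. The sketch below is one possible approach, assuming simple regular expressions are good enough for a first pass. The pattern names and the `flag_claims` function are hypothetical, and the patterns cover only percentages, large figures, years, and quoted text. Names, rankings, and cause-effect claims still need a human read.

```python
import re

# Hypothetical patterns for a few of the claim types listed above; tune them for your content.
CLAIM_PATTERNS = {
    "statistic": re.compile(r"\d+(?:\.\d+)?\s*(?:%|percent\b)|\b\d{1,3}(?:,\d{3})+\b"),
    "date": re.compile(r"\b(?:1[89]|20)\d{2}\b"),
    "quote": re.compile(r'["“][^"”]{10,}["”]'),
}

def flag_claims(text):
    """Return (sentence, [claim types]) pairs for sentences that need a manual check."""
    # Naive sentence split: breaks after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for sentence in sentences:
        kinds = [name for name, pattern in CLAIM_PATTERNS.items() if pattern.search(sentence)]
        if kinds:
            flagged.append((sentence, kinds))
    return flagged

# Placeholder draft text, not real data.
draft = "Remote work grew 44% in five years. Our team loves coffee. The law passed in 1998."
for sentence, kinds in flag_claims(draft):
    print(kinds, sentence)
```

The sentence splitter is deliberately simple and will stumble on abbreviations like “U.S.”, so treat the output as a to-do list for Step 2 rather than a complete inventory.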

Step 2 – Cross-verify with reputable sources

Once you’ve marked claims worth checking, the next step is to find a trustworthy source that supports or corrects them. This doesn’t mean clicking the first result on Google. It means digging one layer deeper.

Here’s where to look:

- Government and public institution websites: .gov and .edu domains, national statistics bureaus

- Peer-reviewed journals and databases: Google Scholar, JSTOR, PubMed

- Major news organizations: Especially those with fact-checking standards

- Direct sources: Press releases, interviews, financial statements, or original research

- Fact-checking platforms: Snopes, PolitiFact, FactCheck.org

And here’s the key: always open the source itself. Don’t rely on summaries or secondhand interpretations. Skim through the actual document or page to confirm the information in context.

Step 3 – Replace, revise, or remove

Once you’ve verified (or debunked) a claim, your last job is to make a judgment call:

- If the fact is solid, rewrite the sentence to include the actual source or clarify the data.

- If it’s partially true, tweak the phrasing. Make sure it reflects what the source actually says, not what the AI assumed.

- If it’s completely off, cut it. Don’t try to salvage a point that doesn’t hold up under scrutiny.

And here’s a bonus tip: if a stat or quote is strong, link to the original source (if appropriate). This builds trust and gives your content more authority.

What Makes a Source Trustworthy in 2025?

Not all sources carry the same weight. Some sound official but are full of bias. Others look slick but crumble under closer inspection. When you’re fact-checking AI content, knowing which sources to trust is just as important as catching the errors.

This section breaks down how to judge credibility in a time when content can be manufactured in seconds.

Authoritative institutions have lasting credibility

The most reliable information often comes from institutions that operate under rigorous standards. This includes government bodies, academic institutions, and research organizations that follow strict protocols and transparency rules.

Examples:

- U.S. Census Bureau, CDC, FDA

- Universities with published research databases

- International bodies like WHO, IMF, or UN

These sites don’t chase clicks. They’re focused on public record, data accuracy, and long-term accessibility, which makes them reliable anchors in a noisy online world.

Not all media outlets are equal

Some news organizations prioritize investigative depth. Others rely on aggregation or SEO-driven content. AI doesn’t distinguish between the two. It treats them as equals.

When verifying a claim through media sources, ask yourself:

- Has this outlet won awards for journalism or fact-checking?

- Do they cite their own sources transparently?

- Do they have a history of frequent retractions or misreporting?

Trusted outlets like Reuters, BBC, NPR, or Associated Press have newsroom standards that help reduce error. Many smaller, niche publications also hold high standards, but it’s worth checking their editorial practices before treating them as definitive.

Fact-checking sites are your fastest shortcut

These platforms exist solely to investigate claims. They publish transparent explanations about what’s true, what’s misleading, and what’s outright false.

Reliable ones include:

- Snopes – Great for viral internet content and memes

- PolitiFact – Excellent for U.S. political statements and campaigns

- FactCheck.org – Run by the Annenberg Public Policy Center, known for nonpartisan coverage

- Full Fact (UK) – Covers politics, health, and misinformation across the UK

The key is to read why something is rated false, not just take the label at face value.

Modern tools help catch subtle red flags

There are also tools and services, some of them AI-powered, built specifically to flag questionable claims and detect AI-generated misinformation. These aren’t perfect, but they’re great for speeding up the first pass of content review.

Examples worth exploring:

- AdVerif.ai – Designed for ad networks, it flags fake news and misinformation

- NewsGuard – Browser extension that rates website trustworthiness

- Originality.ai – Detects AI-written content and can flag false citations

- SciCheck – A science-focused branch of FactCheck.org

Use these tools as signals, not final judges. They’ll help you prioritize what needs deeper checking.

How AI Content Fact-Checking Differs by Medium

Fact-checking isn’t one-size-fits-all. The way you verify a blog post isn’t the same as how you handle a video script or a tweet. AI content takes different forms, and each format comes with its own challenges when it comes to spotting and correcting factual errors.

Here’s how to adjust your approach depending on what kind of content you’re reviewing.

Blogs and articles often look polished, but need careful vetting

Long-form written content is usually the easiest to fact-check, but also the easiest to trust too quickly. When AI writes an article, it mimics human tone, structure, and pacing so well that most readers won’t second-guess it.

What to watch for:

- Fabricated citations that look real at first glance

- Statistics presented without source links or publication dates

- Oversimplified takes on complex subjects, framed as expert insight

Tip: Read AI-written blog posts with a pen in hand (or a comment tool open). Mark every statement that sounds like a fact and confirm whether it actually is one.

Video scripts require transcription before checking

With video content, the first challenge is the format itself. You can’t skim a video the same way you skim text. To fact-check a video script generated by AI, you’ll need the written version. If one doesn’t exist, transcribe it using a tool like Otter.ai or Descript.

Once transcribed, apply the same process:

- Highlight all factual claims

- Check dates, names, and quotes for accuracy

- Watch out for vague attributions like “studies show” or “experts say”

These are red flags. If the script can’t back them up with something specific, they’re empty filler.
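Once you have a transcript, scanning it for vague attributions is easy to automate. Here’s a minimal sketch, assuming the transcript is plain text with one spoken line per row. The phrase list and the `find_vague_attributions` function are illustrative, not exhaustive, so extend the list with phrases you see in your own scripts.

```python
# Illustrative phrase list; add the vague attributions that show up in your own drafts.
VAGUE_ATTRIBUTIONS = [
    "studies show",
    "experts say",
    "research suggests",
    "it is widely known",
]

def find_vague_attributions(script):
    """Return (line number, phrase) pairs for each vague attribution in a transcript."""
    hits = []
    for number, line in enumerate(script.splitlines(), start=1):
        lowered = line.lower()
        for phrase in VAGUE_ATTRIBUTIONS:
            if phrase in lowered:
                hits.append((number, phrase))
    return hits

# Placeholder transcript, not real content.
transcript = "Studies show that sleep matters.\nDrink water daily.\nExperts say eight glasses is ideal."
for number, phrase in find_vague_attributions(transcript):
    print(f"Line {number}: '{phrase}' needs a specific source")
```

Each hit is a line where the script should either name the study or expert, or drop the claim.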

Infographics and visual content hide errors behind design

Visual content can make stats and claims feel bulletproof because they look official. But when AI is involved in generating chart data or choosing visual examples, mistakes often slip in.

Things to double-check:

- Numbers that don’t add up or contradict each other

- Pie charts or graphs that visually exaggerate trends

- Misleading labels, especially in timelines or comparisons

Important: Don’t assume a statistic is accurate just because it’s paired with a nice icon or pulled from a “top 10” list. Check the original source behind the stat.
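One of these checks is plain arithmetic: the slices of a pie chart should add up to the whole. The sketch below shows that check, assuming you’ve typed the chart’s labels and percentages into a dictionary by hand. The `shares_add_up` name and the half-point rounding tolerance are assumptions, not a standard.

```python
import math

def shares_add_up(shares, expected_total=100.0, tolerance=0.5):
    """Return (ok, total): whether the chart's shares sum to the expected total."""
    total = math.fsum(shares.values())
    return math.isclose(total, expected_total, abs_tol=tolerance), total

# Placeholder chart values, not real data.
chart = {"Mobile": 58, "Desktop": 35, "Tablet": 12}
ok, total = shares_add_up(chart)
print(ok, total)
```

A failed check doesn’t tell you which slice is wrong, only that the chart can’t be accurate as drawn. Go back to the source data before publishing it.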

Social media content spreads fast and needs fast checking

AI-generated captions, quotes, and snippets are often designed for viral appeal. But that’s exactly what makes them risky. A single inaccurate claim in a tweet or LinkedIn post can snowball into a reputation hit.

Here’s how to handle short-form AI content:

- Use reverse image search for visual claims (e.g., Google Lens or TinEye)

- Cross-reference quotes with a known database (e.g., Wikiquote or direct interviews)

- Avoid resharing until you’ve confirmed every fact, even if the content aligns with your message

Virality is no excuse for skipping verification.

When to Use Human Editors vs. Fact-Checking Tools

Fact-checking AI-generated content doesn’t mean choosing between people and tools. The most efficient approach combines both. Each has strengths the other doesn’t, and knowing when to rely on human judgment versus automation can save you time while improving accuracy.

This section shows you when to use which and how to combine them for a smarter workflow.

What human editors catch that tools miss

AI tools are fast. But they’re still scanning based on patterns and probability. Human editors, on the other hand, pick up on nuance, things that don’t show up in a technical scan.

Humans can:

- Sense when something sounds off, even if it’s technically correct

- Catch misleading phrasing or bias that’s subtle but damaging

- Detect context issues, like when a stat is true in one country but not another

- Rewrite clunky or overly confident claims without losing tone or clarity

Example: An AI tool might flag that a stat exists. A human will ask: does this stat actually support the point being made?

When tools save time and catch the first layer of issues

Automated fact-checking tools are ideal for high-volume content, especially if you need to scan dozens of claims across multiple pages. They’re also great for flagging content that might be problematic even if they can’t explain why.

Use tools when:

- You need to check for potential AI hallucinations at scale

- You want to verify originality alongside factual accuracy

- You’re reviewing content in unfamiliar industries or subjects

- You’re scanning content created by freelance writers or external agencies

Good tools to try:

- Originality.ai (flags AI use + factual inconsistencies)

- Grammarly’s plagiarism + citation checker

- AdVerif.ai (real-time misinformation detection for ads and content)

- Google Fact Check Explorer

How hybrid workflows speed up the process without sacrificing quality

The best strategy isn’t either-or. It’s both. Start with tools to catch the surface-level red flags, then bring in human editors for deeper review and final judgment.

Here’s a sample workflow:

- Run AI content through detection + verification tools

  - Flag any citations, claims, or suspicious phrasing

- Have a human review the flagged sections

  - Decide whether to revise, replace, or remove

- Use collaborative editing tools (like Google Docs or Notion)

  - Allow for internal comments, context, and version tracking

- Optional: Bring in a subject-matter expert for technical content

  - Especially for legal, medical, or financial topics

This approach keeps your workflow lean while protecting your reputation.

Building a Fact-Checking Culture in Your Team or Business

Fact-checking can’t be something you tack on at the end. If you’re serious about accuracy, it has to be part of how your team operates from the start. That means creating a culture where everyone – writers, editors, marketers, even interns – understands the value of getting the facts right.

Here’s how to make accuracy a built-in habit, not a last-minute fix.

Fact-checking reinforces brand trust

When your audience reads your content, they’re not just absorbing information. They’re deciding if they trust your voice. And once you lose that trust, even from a single error, it’s hard to get it back.

Accuracy doesn’t just protect your credibility. It boosts your content’s performance. Search engines reward trustworthy information. Journalists and partners are more likely to cite you. And customers feel safer engaging with your brand.

You don’t need to be a newsroom to earn trust. You just need consistency.

Create internal SOPs for verifying claims

A team-wide standard makes the difference between “We probably fact-checked this” and “We definitely did.” When everyone follows the same system, it becomes second nature.

Here’s what that could look like:

- Highlight all factual claims in the draft (stats, quotes, names, numbers)

- Verify each claim using pre-approved sources or research tools

- Add comment notes if a source link was used or if clarification is needed

- Have a second pair of eyes review final drafts before publishing

- Use version tracking to log major factual edits

You can keep it simple, but it has to be clear. Don’t leave fact-checking up to personal judgment or assumptions.
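If your team tracks drafts in a script or spreadsheet export, the checklist above can double as a simple publish gate. The sketch below is one way to model it, not a prescribed system. The `Claim` record, the `Status` values, and the `blocking_claims` function are all hypothetical names. The rule it applies is that a draft is held until every claim is verified, has a source noted, and has a named reviewer.

```python
from dataclasses import dataclass
from enum import Enum

class Status(Enum):
    UNVERIFIED = "unverified"
    VERIFIED = "verified"
    PARTIALLY_TRUE = "partially true"  # needs rephrasing, then re-checking
    FALSE = "false"                    # should be cut from the draft

@dataclass
class Claim:
    text: str
    status: Status = Status.UNVERIFIED
    source: str = ""    # where the claim was confirmed
    reviewer: str = ""  # the second pair of eyes

def blocking_claims(claims):
    """Return the claims that should stop a draft from being published."""
    blockers = []
    for claim in claims:
        unresolved = claim.status is not Status.VERIFIED
        missing_trail = not claim.source or not claim.reviewer
        if unresolved or missing_trail:
            blockers.append(claim)
    return blockers

# Placeholder entries, not real claims or sources.
draft_claims = [
    Claim("Claim A", Status.VERIFIED, source="original report", reviewer="Editor 1"),
    Claim("Claim B"),
    Claim("Claim C", Status.VERIFIED, source="press release"),
]
for claim in blocking_claims(draft_claims):
    print("Hold for review:", claim.text)
```

The point of the structure is the audit trail: anyone can see which claims were checked, against what, and by whom.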

Train your team to recognize red flags

Not everyone on your team needs to be a researcher, but they should all be trained to pause when something feels off. A quick internal workshop or onboarding module can go a long way.

Teach them how to spot:

- Claims without any supporting context

- Quotes that seem too perfect or generic

- Stats that don’t cite a source or sound exaggerated

- Overly confident takes on controversial or complex topics

Give your team examples. Show them the difference between solid, supported writing and AI-generated content that sounds smart but collapses under scrutiny.

The more familiar they are with these patterns, the less likely inaccurate content will slip through.

How Trelexa Helps You Create Verified, Authoritative Content

You don’t need a full-time fact-checker to publish content you can stand behind. At Trelexa, we combine real editorial oversight with smart internal tools that flag shaky claims before they go live.

Whether it’s for a campaign, article, or client deliverable, we make sure the information you put out isn’t just compelling. It’s accurate, sourced, and trustworthy. That’s how we help you build long-term credibility in a fast-moving, AI-saturated world.

Final Thoughts

AI is changing how content gets made, but it hasn’t changed what readers expect. People still want truth. They still want clarity. And they still make decisions based on what they read, watch, or share.

That’s why fact-checking can’t be optional. When AI gives you a first draft, it’s your job to give it a second look. That means checking the numbers. Rethinking the quotes. Removing what doesn’t hold up.

The tools are there. The workflow is manageable. And the payoff is lasting trust, something no algorithm can generate for you.

So don’t treat fact-checking as a chore. Treat it as your edge.